Decoupling Data

Benefits for Digital Transformation

Written by Ian C. Tomlin | 12th January 2024

Decoupling data: What does it mean and why do it? In this article, we explain the reason why so many CIOs have decoupled data architectures and data fabrics front-of-mind in their 2024 investment plans.

“All I want to know is…”

This is how most business intelligence conversations begin.

They usually start with an employee or manager who is curious and wants to ask something new of their data.

It does seem rather remarkable that here we are in 2023, and businesspeople still find themselves unable to answer the most fundamental questions about their customers, business, products, and growth performance.

I began my relationship with business intelligence and organizational change some three decades ago. In terms of outcomes, I can’t say it’s moved on much.

The problem of making data consumable for analysis has not gone away

Step into any major corporation and you will find people employed to spend over 80% of their time working in spreadsheets to analyze data. Of this work, at least half is likely to go on gathering, cleansing, normalizing and preparing data to make it useful for analysis.

Think too of the departmental and regional heads that spend over 20% of their time producing and presenting reports to more senior executives who could’ve asked their questions directly of the data, had they the means to do so.

You want your team to be curious, to explore new possibilities, understand market opportunity and customer wants, and see the wood for the trees. But how do you equip them with the means to ask any question they have on their minds without first resorting to opening up a spreadsheet, entering or pasting data, merging columns, de-duping rows, and all the rest of it?

That’s what this article will answer.

AI bots need good data too!

The need for composable data is not peculiar to the subject of data analysis and business intelligence. For processes to be operated by software, those ‘digital agents’ and software applications must be fed with good data too.

Many of the tech industry headlines in 2022 focused on the growing role of artificial intelligence and its use in business to make decisions, supplement human skills, and to process larger amounts of data in shorter periods of time.

Companies want to bridge their front office and back office with automations and software, not with human-in-the-loop, hope-for-the-best resourcing. Creating these automations requires data, data, data.

Is it any wonder that so many worthy digital transformation projects fail at the first hurdle, when the data they need to make decisions and action processes is scattered to the four winds?

Why your data is bundled in the first place

Traditionally, most business-critical enterprise data exists in systems of record and, for mature organizations, legacy systems. For decades, this data world was reassuringly surrounded by the protective sheath of a firewall.

Over the past decade, data supply and consumption have expanded exponentially beyond the enterprise boundary. More stakeholders want to share data and gain transparency over their services. Organizations make more use of SaaS apps (built to service tasks independently of other systems), of cloud services, and of social media and marketplace platforms like Facebook, LinkedIn, Amazon, Google, and WhatsApp.

In the end, your data is organized by software programs and services that generate the data. I can get a record of the purchase from Amazon and it will stay there forever. When my blood test is taken, it is stored by the provider of the test.

Every fragment of data gets stored into the system that created it.

The end result? Use over 50 SaaS apps and you end up with 50 unique data silos–organized in proprietary structural arrangements–that probably weren’t made for sharing.

The business need for digital decoupling

Every business today must be data-driven to survive. Digital business is generally always on, and customers want transparency in everything they do. The notion of real-time business is upon us; what Bill Gates called ‘business at the speed of light’.

The watchword in boardrooms is AGILITY; being able to switch on and switch off resources according to demands as they happen. All this presupposes people are making decisions at all levels of the enterprise based on facts, not intuition and gut feel.

Imagine a scene 12 months from today. You are sitting in your office, and you know you can ask Siri any question about your business, in the full expectation she has the data to answer your questions. Whilst the AI-powered chatbot interfaces exist to achieve this today, the absence of a decoupled architecture means it won’t happen any time soon in most organizations.

While data is being held for ransom in the silos of third-party software vendors, the ability to ask any question you like remains a pipedream.

The way to solve this is to decouple data from the business systems that create and manage it; to form a departmental or enterprise-wide data fabric layer of pre-gathered, pre-cleansed, and fully composable data. This way, data can remain completely autonomous from systems, and multiple connected services can be then added to serve up data from this ‘clean’ repository as needed.
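To make the idea concrete, here is a minimal sketch of a decoupled data layer. It is an illustration under stated assumptions, not a reference implementation: the two source exports (`crm_rows`, `billing_rows`), their field names, and the helpers `build_fabric`, `revenue_by_customer` and `revenue_by_region` are all hypothetical, and an in-memory SQLite database stands in for whatever repository a real data fabric would use.

```python
import sqlite3

# Hypothetical, pre-cleansed exports from two source systems (illustrative only).
crm_rows = [
    {"customer_id": "C001", "name": "Acme Ltd", "region": "EMEA"},
    {"customer_id": "C002", "name": "Globex", "region": "APAC"},
]
billing_rows = [
    {"customer_id": "C001", "invoice_total": 1200.0},
    {"customer_id": "C001", "invoice_total": 800.0},
    {"customer_id": "C002", "invoice_total": 450.0},
]


def build_fabric(crm, billing):
    """Load records into one repository that lives apart from the source systems."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (customer_id TEXT PRIMARY KEY, name TEXT, region TEXT)"
    )
    conn.execute("CREATE TABLE invoices (customer_id TEXT, invoice_total REAL)")
    conn.executemany(
        "INSERT INTO customers VALUES (:customer_id, :name, :region)", crm
    )
    conn.executemany(
        "INSERT INTO invoices VALUES (:customer_id, :invoice_total)", billing
    )
    conn.commit()
    return conn


def revenue_by_customer(conn):
    """One consuming service: total invoiced per customer."""
    query = """
        SELECT c.name, SUM(i.invoice_total)
        FROM customers c JOIN invoices i ON i.customer_id = c.customer_id
        GROUP BY c.customer_id, c.name
        ORDER BY c.name
    """
    return conn.execute(query).fetchall()


def revenue_by_region(conn):
    """A second, independent service asking a different question of the same data."""
    query = """
        SELECT c.region, SUM(i.invoice_total)
        FROM customers c JOIN invoices i ON i.customer_id = c.customer_id
        GROUP BY c.region
        ORDER BY c.region
    """
    return conn.execute(query).fetchall()


conn = build_fabric(crm_rows, billing_rows)
print(revenue_by_customer(conn))
print(revenue_by_region(conn))
```

The point of the sketch is that neither query touches the CRM or the billing system. A new question becomes a new query against the shared layer, not a new extraction project.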

DIGITAL DOCUMENTS REMASTERED

Micro-Portals • Forms • Reports • Training Dashboards • Charts • Maps • Tables Checklists • Onboarding • Risk Registers • Presentations • eBooks

The need for a decoupled data architecture

Data value is all about relationships and context.

Take, for example, a customer record from your CRM system. It means so much more when you can explore the financial data that exists on the same customer, or the service record that exists in your service management system. This customer record probably discloses further insights when you see what data exists about this customer on Google, Amazon and Facebook. And more so if you can add the mobile phone data from call records and contact centre feeds.

No system on its own can uncover all the secrets of the data it holds. To maximize the value of your customer data, financial data, training data, sales pipeline and performance data, you need to compare it to everything else in its biosphere.

Even when you create a system-specific reporting layer, it’s likely you will want to harvest contextual data from other third-party systems and present views for different stakeholder interest groups in different ways. The report tooling supplied by SaaS vendors is, frankly, too primitive to service these needs. This is understandable, because the requirement falls outside the scope of their own systems’ reporting capability.

You would expect the problem of data integrity and organization to exist only when you look across multiple third-party systems. Surprisingly, many organizations that operate software from the same supplier can equally find their data repositories hold inconsistent data. This is because each operation will implement solutions in different ways.

Equally, the way teams use systems will vary according to local cultures and behavioral norms. This means a system designed in precisely the same format as another might still surface different data results (for instance, one team might use the ‘Customer’ field to identify a customer while another uses a Customer Code).
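The ‘Customer’ field versus Customer Code mismatch can be sketched in a few lines. This is a hedged illustration only: the lookup table `CODE_TO_NAME`, the field names `customer` and `customer_code`, and the helper `canonical_customer` are assumptions made for the example, not taken from any particular system.

```python
# Hypothetical lookup held in the data layer: customer code -> canonical name.
CODE_TO_NAME = {"AC-001": "Acme Ltd", "GL-002": "Globex"}


def canonical_customer(record):
    """Return one canonical customer name, whichever field the team filled in."""
    code = (record.get("customer_code") or "").strip().upper()
    if code in CODE_TO_NAME:
        return CODE_TO_NAME[code]
    name = (record.get("customer") or "").strip()
    # Fall back to the free-text name; None marks a record for manual review.
    return name or None


# Two teams recording the same customer in different ways.
team_a = [{"customer": "Acme Ltd", "order_value": 100}]
team_b = [{"customer_code": "ac-001", "order_value": 250}]

totals = {}
for rec in team_a + team_b:
    key = canonical_customer(rec)
    totals[key] = totals.get(key, 0) + rec["order_value"]

print(totals)
```

Without the reconciliation step, the two orders would be reported against two different customers. With it, both roll up under one name.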

Relentless departmental reporting requests create demands for multiple connected services to exist as a perpetual state. This is driving IT requirements for an enterprise-wide and autonomous data fabric layer. When ad-hoc solutions are created to serve discrete projects that evolve independently, the cost and risk to the business are amplified exponentially.

Composable data assets

Coined by Gartner, the term “composable enterprise” first appeared in 2021 and is widely used today to describe a modular approach to digital service delivery and software development. In other words, a plug-and-play application architecture whereby the various components can be easily configured and reconfigured.

A key element of this architecture is the separation of the data layer from the application layer, fostering greater re-usability of both.

A successful digital transformation requires decoupling the data layer from legacy IT so that companies aren’t forced to modernize their enterprise resource planning systems all at once—an expensive, time-consuming, and risky proposition. Companies that implement data and digital platforms—separating the data layer from legacy IT—can scale up new digital services faster, while upgrading their core IT. Source BCG

Critical decoupling architecture objectives

Organizations that adopt a data fabric and decoupled architecture will focus on the following priorities, to:

• Liberate data from old, inflexible legacy core systems

• Transfer the ownership of data from IT to the business

• Bring data transparency to create a curious, learn fast/fail fast, data-driven decisioning culture

• Speed time to value of new projects and digital services

Where we are today

Overlooking the obvious problem

In recent years, IT investment decisions have flowed down to departmental leaders who don’t see data quality or provisioning as a priority. As a result, most organizational IT decision-makers have overlooked or avoided investing in a data fabric that offers the ‘ready-to-use’ composable data needed to answer successive new what-if questions and power new systems and automation.

The obvious alternative (for department heads at least) is to employ more roles in data analytics and manually crank out data as and when it is needed.

These point-specific solutions inevitably lead to delays in projects and inefficiencies. Furthermore, every time a new requirement emerges for a different blend of data, it’s unclear whether the data relationships exist to combine data in the desired way.

As a consequence of this fragmentation of IT procurement and decision-making, and in some cases the absence of a firm central hand to guide technology architectures, firms are supersizing their project risk, living with project delays, slowing their ability to answer new questions, and settling for a ‘business as usual’ cost of manual data crunching.

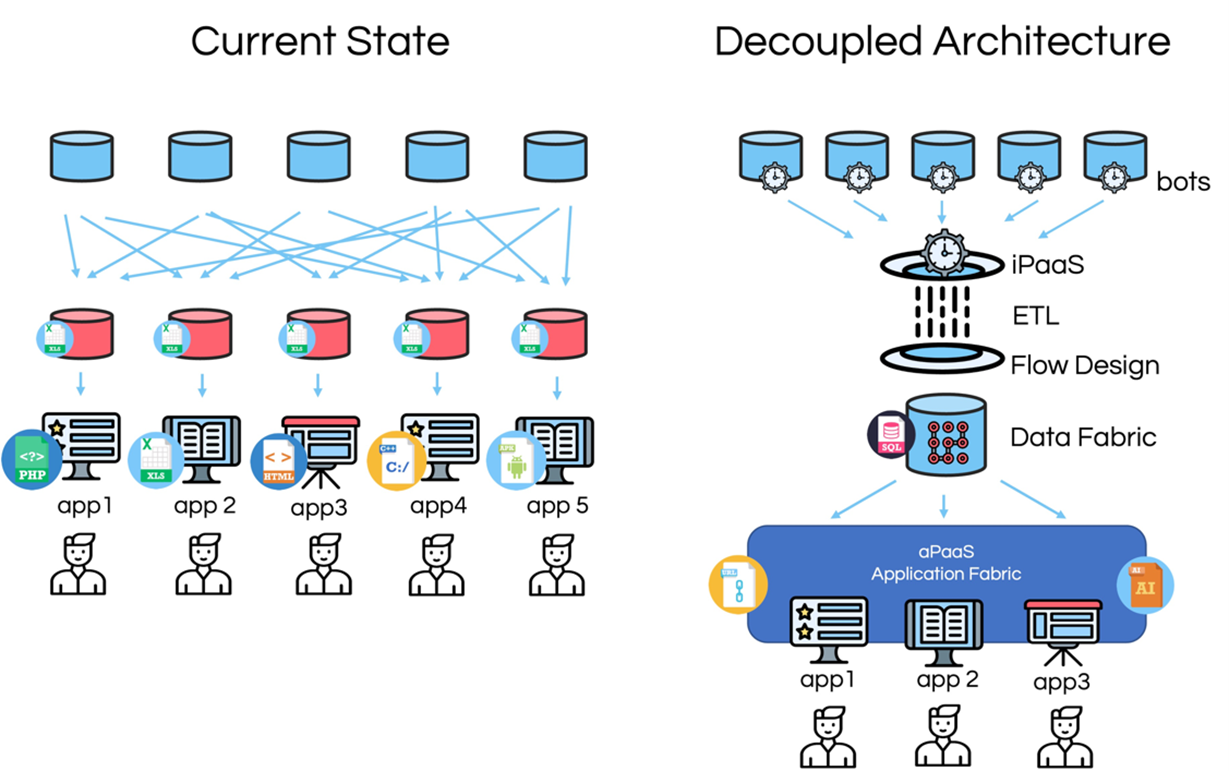

What a decoupling architecture looks like

There are common technology building blocks to decoupling architecture solutions:

Data harvesting and organization components

- Infrastructure as a service and cloud-native provisioning to negate the use of poorly utilized in-house server infrastructure.

- Data Mashups and Software Bots to augment data feed information flows using upload templates, watch folders, scheduled events, etc. to harvest data from existing systems and data sources.

- Extract, Transform and Load (ETL) tooling, often powered by fuzzy logic and AI, to cleanse, normalize, enrich and organize data.

- Database systems design and provisioning to create and organize relational and flat file databases to maximize data relationships and reuse.

- Integration Platform-as-a-Service (iPaaS) and codeless data connectors to connect reporting systems to legacy systems and other data sources without having to code an interface.
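As a rough illustration of the cleanse-and-normalize step in the list above, the sketch below removes near-duplicate customer names. It is a simplified stand-in, not the fuzzy logic or AI tooling a commercial ETL product would provide: it uses plain string similarity from Python's standard `difflib` module, and the function names, sample data and the 0.85 threshold are assumptions chosen for the example.

```python
import difflib


def normalize(name):
    """Lower-case, trim and collapse whitespace so trivial variations compare equal."""
    return " ".join(name.lower().split())


def dedupe(names, threshold=0.85):
    """Keep the first spelling of each name; drop later near-duplicates."""
    kept = []
    for raw in names:
        candidate = normalize(raw)
        is_duplicate = any(
            difflib.SequenceMatcher(None, candidate, normalize(k)).ratio() >= threshold
            for k in kept
        )
        if not is_duplicate:
            kept.append(raw.strip())
    return kept


raw_names = ["Acme Ltd", "  acme ltd ", "Acme Ltd.", "Globex Corporation"]
clean = dedupe(raw_names)
print(clean)
```

A threshold like this is a trade-off: set it too low and distinct customers are merged, too high and obvious duplicates survive. In practice, borderline matches are usually routed to a person for review.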

Application components

- Application Platform-as-a-Service (aPaaS) – to provision services in support of the design, deployment, and operation of software applications.

- Application Fabric – A cloud platform to manage the publishing and organization of large numbers of discrete software applications used by digital workers to consume data.

- Cloud infrastructure services – A cloud platform to administer cloud infrastructural deployments, data security, replication and scaling.

- Cloud-native clustered deployments of secure private clouds at scale – A cloud platform service used to provision clustered private cloud deployments, thereby removing the need for administrators to log in to successive discrete sessions when supporting multiples of private clouds (something that often happens when businesses operate sales channels and supply chains).

Service delivery components

- Integration with popular desktop and reporting tools

- Reporting services to publish dashboards, charts, and reports

- Information flow design tooling to create email/SMS alerts and notifications

- Low-code/No-code/Codeless applications design and publishing services (to build apps needed to implement changes to processes resulting from

- Digital documents to democratize data use and consumption

- AI chatbot human interfaces, so digital workers can ask questions

Examples of decoupled architecture data use cases

Applications for decoupling span the enterprise, although justifications for projects can originate at a departmental level, as illustrated by the examples below.

Managing growth performance across a sales territory

The sales division of a global electronics company responsible for the Middle Eastern, Eastern European and African markets was being hampered by a shortfall in sales insights as a result of its widespread data silos.

This meant the data-gathering process was time- and effort-intensive. Managers were presented with copious data but no actionable insights or recommendations.

To resolve it, a data fabric was created across the regional sales platforms and ERP data repositories that could deliver timely actionable insights to stakeholders on demand. Read the full case story.

Creating a Customer Data Platform (CDP) to focus operations toward profitable business

The management team of a progressive office equipment and technology business in Europe identified the need to become ‘data-driven’. The sales leadership wanted to create a single view of its customers to focus sales efforts on the most profitable opportunities and automate delivery processes. Read the full case story here.

Scanning the market horizon, and matching resources to opportunities

Power and Energy is a fast-changing market. In the professional services industry, becoming adept at surfacing new advisory opportunities–also knowing what advisory services to offer and how to resource them–is critical to success. Find out how one global advisory firm used a decoupling architecture to gain a competitive advantage.

Final thoughts

1. Digital decoupling is a must-have for any business that wants to optimize its ability to be data-driven, foster a culture of curiosity, and answer new questions cost-effectively.

2. The success and time to value of digital transformations, and of their substrates such as hyper-automation, blockchain markets and customer self-service, have become increasingly dependent on access to a decoupled architecture that makes data composable through a coherent and useful data fabric. Trying to ignore or navigate around the data bundling problem to cut delivery costs almost inevitably achieves the reverse.

3. A decoupled architecture is simpler to achieve today thanks to advanced iPaaS/aPaaS codeless platforms like Encanvas that serve up all the necessary building blocks of data ETL, software bots, data mashup, data fabric, app fabric, fuzzy logic matching and bridging, digital document democratization and clustered private-cloud deployment.
